Getting Started

This section contains the tutorials for llmaz.

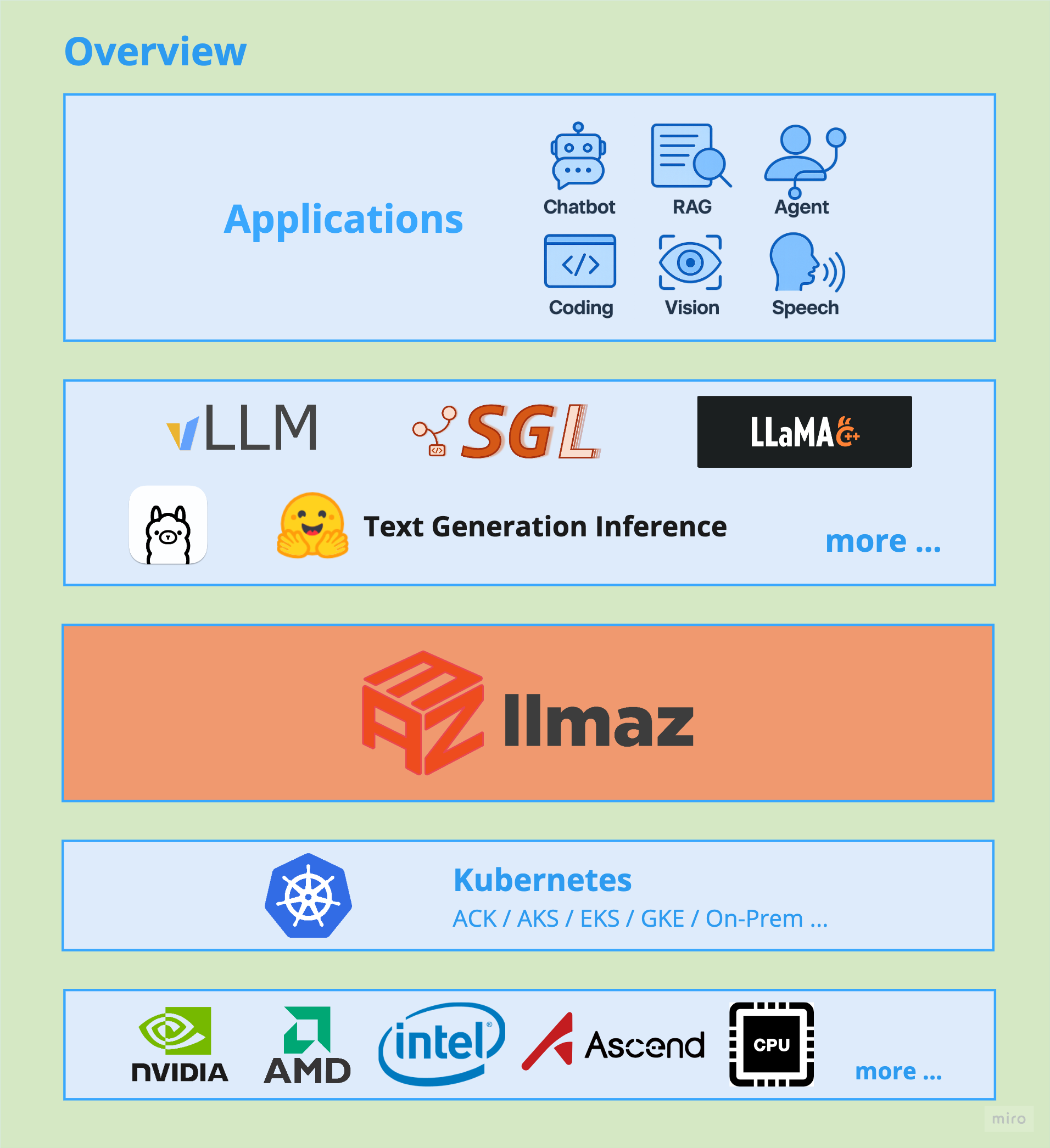

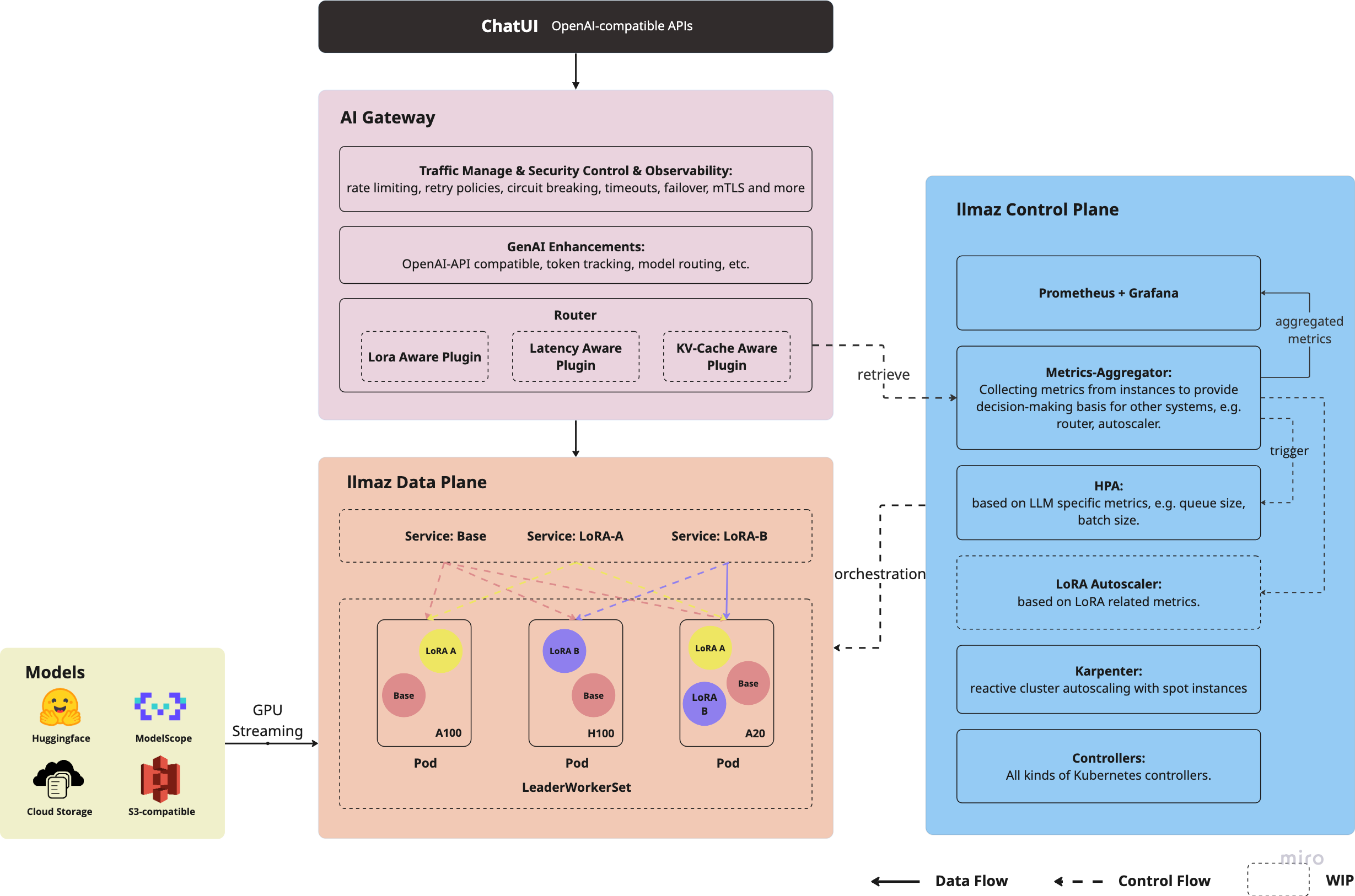

llmaz (pronounced /lima:z/) aims to provide a production-ready inference platform for large language models on Kubernetes. It integrates closely with state-of-the-art inference backends to bring leading-edge research to the cloud.

This section contains the advanced features of llmaz.

This section contains the llmaz integration information.

This section contains developer guidance for people who want to learn more about this project.

This section contains the llmaz reference information.
